Update: This post (and the bug report Joachim Breitner submitted in succession) resulted in the change of the haskell documentation: The statement “At the moment, -O2 is unlikely to produce better code than” was removed from the documentation two months after this post. A denser and cleaner representation of the data and the main arguments is given in my bachelor thesis (written in german) that I finished around the same time.

TL;DR: There’s no significant difference between the GHC versions 7.* and 8.0.1-alpha regarding the Haskell code used in the benchmarking game. And there might be an improvement of the performance of idiomatic Haskell code from GHC 7.0.1 to GHC 8.0.1-alpha.

I’m currently building a new benchmarking tool called temci as part of my bachelor thesis.

In search for good programs to benchmark for the evaluation part, I stumbled across the benchmarking game. This blog posts is about using parts of the benchmarking game and temci to measure and compare the performance of the following GHC versions: 7.0.1, 7.2.1, 7.4.1, 7.6.1, 7.8.1, 7.10.1 and 8.0.0 (the version from mid January, called 8.0.1 here for brevity) using different optimization levels (“-O”, “-O2” and “-Odph”, see: Haskell documentation) .

Related work

Johannes Waldman also compared several GHC versions (including some 6.* versions) using the nofib benchmarking suite: http://www.imn.htwk-leipzig.de/~waldmann/etc/nofib/comparison-k.text

(I didn’t include some 6.* versions because I was unable to install them. It would be cool if some one knows how to install older GHC versions than 7.0.1 on Ubuntu and shows me how to do it. So another version of this blog post could include them.)

Temci and game.py

Temci is a essentially a framework wrapping other benchmarking tools (perf stat, SPEC or time). It adds some cool features like assembler randomization or cpuset management. It helps to benchmark programs easily and to compare these benchmarks afterwards. Temci is designed to be extended and built upon with ease. The benchmarks in this blog post are produced with the game.py file that is modeled after the original benchmarks game and uses temci to do the actual measurements.

The benchmarked Haskell code comes from the benchmarks game (with some small modifications). Not all Haskell files are used, as many didn’t compile properly in all GHC versions or run almost infinitely (with their smallest input). I fixed simple errors but learning advanced Haskell just to fix the non trivial bugs is not in the scope of my bachelor thesis.

The aim of game.py is to provide a tool to compare different implementations of the same language by compiling several programs in several categories and running them with different inputs in reproducible manner.

Setup

The benchmarking results were obtained on an Ubuntu 15.10 Linux (with ecrypt as its file system and unity as its desktop environment) running with root privileges on a computer with 8GB DDR3 RAM, a 120 GB SSD (mostly empty), all temporary files (compilation results, …) in the RAM (via tmpfs) and a AMD FX™ 4100 x86_64 CPU. The CPU has 4 cores, 3 of them were available almost exclusively to the benchmarked program. This computer is just used for benchmarking purposes.

The whole benchmarking took around 4 days (with 20 iterations each).

What’s a benchmarked program? It’s the term here for the actually benchmarked command. It depends on the used compiler, the actual Haskell code and the input for the resulting program (if the programs resulting performance is important).

Important note: As the benchmarked code comes from the benchmarks game, the code might not have the best functional style and it doesn’t use lazy evaluation much, …. The benchmarking result can only give of GHC’s performance. If you distrust my benchmarking results, make the measurements yourself and add other algorithms to benchmark. The code is on github, please write an issue at github if you encounter any problems.

Used temci plugins

The following temci plugins were used to improve the benchmarking results (i.e. to reduce the variance and increase the reproducibility).

Discard blocks

The first 5 benchmarkings (one benchmarking block) are dismissed to prefill the cpu and file system caches.

Nice and other_nice

The process priority of the benchmarked process is increased and the priority of most other processes is decreased.

Stop Start

Many processes that are non vital to the system are stopped via a Unix signal, especially the ssh processes and most child processes of the window manager (e.g. firefox, …).

CPU sets

The benchmarked process has its own cpu set and can use 3 CPUs almost exclusively.

Mean scores (and other statistical stuff)

The following discussion of the benchmarking results uses several statistical terms that are explained here to prevent misunderstandings.

Std or standard deviation

A statistical property that describes the variance of a given set of values. It’s an important quality measure for the benchmarking. To quote Gernot Heiser’s list of systems benchmarking crimes:

Always do several runs, and check the standard deviation. Watch out for abnormal variance. In the sort of measurements we do, standard deviations are normally expected to be less than 0.1%. If you see >1% this should ring alarm bells.

Geometric standard deviation

The standard deviation of geometric mean. Subtract 1 to get a rough equivalent to the “normal” standard deviation

Mean score or mean / best mean

This property is the division of the mean of the timings of the benchmarked program through the lowest (best) mean of the benchmarked program over all GHC versions. This gives the performance of each implementation relative to the “perfect” GHC compiler.

Mean score Relative to the FIRst or mean / mean of the first implementation

This property is the mean of the timing of the benchmarked program for a specific implementation relative to the first implementation (it’s GHC 7.0.1 here).

Most plots in this blog post show the mean score distributions. The same conclusions drawn from the plots and the corresponding tables can also be drawn from the mean score relative to the first distributions.

Mean (score) differences

It’s important to take standard deviations into account when comparing different benchmarking scores, because as Gernot Heiser points out:

- Don’t believe any effect that is less than a standard deviation

- Be highly suspicious if it is less than two standard deviations

Geometric mean

To summarize several mean scores the geometric mean is used. Using the normal (arithmetic) mean with these normalized values would be like lying, according to the paper http://www.cse.unsw.edu.au/~cs9242/15/papers/Fleming_Wallace_86.pdf by Fleming and Wallace:

Using the arithmetic mean to summarize normalized benchmark results leads to mistaken conclusions that can be avoided by using the preferred method: the geometric mean.

Arithmetic mean

To summarize several mean scores relative to the first the arithmetic mean is used. Although the mean scores are normalized, the arithmetic mean can be used, as the reference implementation doesn’t change between several categories.

Benchmarking results

The benchmarking results with lot’s of plots and tables can be found here:

- all optimizations: mean scores, mean scores relative to the first

- “-O”: mean scores, mean scores relative to the first

- “-O2”: mean scores, mean scores relative to the first

- “-Odph”: mean scores, mean scores relative to the first

- compile time: all optimizations and mean scores relative to the first

And the raw data (the YAML result files produced by temci) can be found here.

Conclusions

I discuss the benchmarking results by giving some of my conclusions and explaining them below (with plots and everything).

On compile times

- There are no significant differences between the different GHC versions and optimization levels (probably due to the fact, that the compiling takes only between 1 and 1.5 seconds)

Conclusion 1

As you can see in the following graphs, there’s really no (significant) difference between the different GHC versions. The following is the plot and the summary for the set of all mean scores for each GHC version using “-Odph”:

The right column is the important one: It shows that the standard deviation is around 30% for each single benchmarking. As every benchmarked program is only measured 20 times there is really no significance in the data. The results for the other optimization levels are nearly the same.

This result is a bit counter intuitive if you only see the plot above. But as this plot tells us only about the variance of the relative means over all categories and subprograms, the real deviation is hidden. If I only showed the plot, you would probably believe, that the newer GHC versions are faster than the older ones. It’s so easy to lie with statistics. Therefore: Distrust every (!) mean oder median value without at least a proper standard deviation (or variation) figure.

The following are the compile time mean score relative to the first distributions for all (tested) GHC versions and optimization levels (grouped by the latter). The table is omitted for brevity, as the standard deviations are not very different from the example above. The whole report can be seen here:

On execution times

- There’s no significant difference in the results between using “-Odph” and “-O2”

- There’s a significant difference between using “-Odph” (or “-O2”) and “-O” this is contrary to the Haskell documentation that tells us: At the moment,

-O2is unlikely to produce better code than-O. - The variance of the geometric mean over all individual mean scores is significantly with GHC 7.4.1 and GHC 7.6.1 than with the other versions (regarding “-Odph” and “-O2”).

- The variance plotted over time has the form of a parabola.

- The same can be sad about the geometric mean of all mean scores for each implementation.

- The differences between these geometric means aren’t significant (and mostly lower than one standard deviation)

- There seems to be a roughly significant improvement from 7.0.1 to 8.0.1 regarding „-O”

Conclusion 1 & 2

The following are the plots for the set of all mean scores per GHC version and optimization level (first “-O”, then “-O2” and “-Odph”):

It’s visually clear the last “-O2” and “-Odph” are almost identical and that they differ from “-O”. But as I wrote before, the tabular data with the actual standard deviations is important and is given in the following in the same order:

Another way to see this conclusion is by comparing the mean score relative to the first distributions for all combinations of GHC version and all optimization levels, grouped by the latter:

Yet another way to look at the benchmarked data is to simply subtract each measured value regarding the data of one optimization level from the corresponding value of the data of another one. This data can then be plotted in the same way as above (but with setting the divisor for the mean scores to one to allow comparison of the values). The following are first the plot summarizing “-O” – “-O2” and then the plot summarizing “-O2” – “-Odph”:

The conclusions should be obvious as mean over all differences as shown in the plots is many times higher with “-O” – “-O2” than with “-O2” – “-Odph” (just look at the x axis).

The difference between “-O” and “-O2” is (especially with older GHC versions) kind of significant, but with newer versions this effect diminishes. The following is the corresponding table:

For more plots, take a look at the actual game.py report.

Conclusion 3 & 4 & 5

It’s easy to see with this data that the variance of the geometric mean over all individual mean scores is significantly higher with GHC 7.4.1 and GHC 7.6.1 than with the other versions (regarding “-Odph” and “-O2”). And that the variance and means over time are in some sort of parabolic form.

The same conclusions can be drawn from the mean score relative to the first distributions:

Conclusion 6

The data also shows that for “-O2” and “-Odph” no difference between the mean scores of different GHC versions is really significant (or bigger than the maximum of standard deviations of both + the maximum of mean standard deviations).

Conclusion 7

As the plot of the beginning and the following table shows, there is a slightly significant difference between GHC 7 and GHC 8 (regarding “-O”), regarding the mean scores (first table) and the mean scores relative to the first (second table):

But it’s only slightly significant, as the maximum of the standard deviations between the two is around 13% and the difference is only around 10%. This difference is far higher (relative to the standard deviations) than with “-O2” or “-Odph”.

On execution times on specific program categories

The above just discussed the performance over all program categories (like binarytrees or fannkuchredux). The following are observations on specific program categories.

fannkuchredux

The fannkuchredux category is the category with longest running programs and can therefore show bigger (and more significant) differences. This category consists of has two benchmarked programs: 1 and 3

The first program consists of around 40 lines of code. It’s written in a good style (without fancy Haskell features), as far as I can tell.

The second program consists of around 90 lines of code. It uses the Control.Concurrent and the Foreign module to do some optimizations. The Haskell code is, contrary to the first program, pretty hard to understand for me.

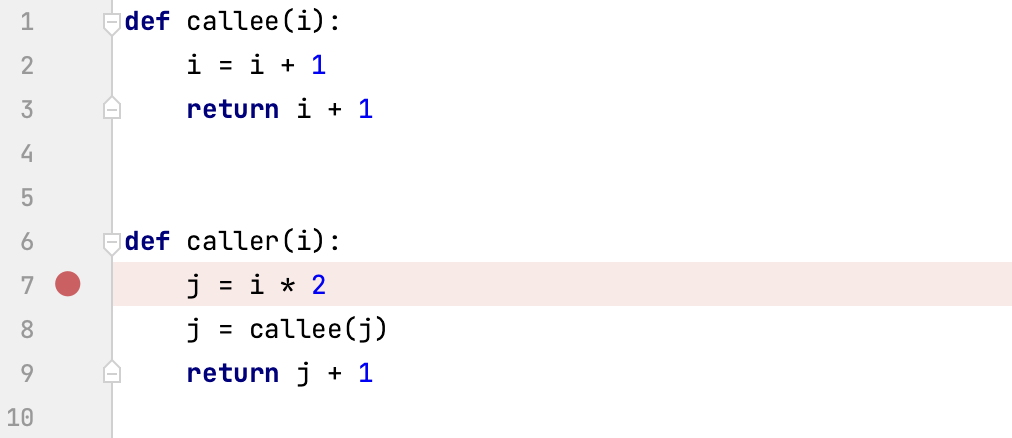

If we look at the performance of this two programs regarding the biggest input (12) and the “-Odph” optimization level (there is no big difference with other inputs or optimization levels), we see that GHC 7.0.1 is better with the second (optimized) program and 8.0.1 is better with first (simple) program (the plot shows the set of execution times in milliseconds for every GHC version):

This difference might suggest that

- the second program was optimized for a specific GHC version or

- the GHC got better in optimizing idiomatic Haskell programs (like the first)

- (maybe that’s the reason why there’s no significant difference between the GHC versions regarding all programs and inputs)

- that manually optimizing Haskell code is worthy subject: it reduces in this case the execution time from 5 – 9 minutes to 50 – 60 seconds.

Although the significance is not high (and its some sort of reading tea leaves at this significance levels), looking at the mean score for the upper half and the lower half of programs per category regarding their entropy supports the second conclusion.

The following two tables show the mean score distribution properties for this two halves for the optimization level “-O2” (its the nearly the same for “-Odph”, but different from “-O”). The entropy of a program is the length of its gnu zipped source code. Programs with a lower entropy tend to be simpler and shorter. If a category has an uneven number of programs, then one program belongs both to the lower and to the upper half.

And the same for mean scores relative to the first:

| mean score (mean(mean / mean of first impl)) | mean score std (std(mean / mean of first impl)) | mean rel std (mean(std / mean)) | |

|---|---|---|---|

| 'ghc-7.0.1' | '100.00000%' | '0.00000%' | '1.94797%' |

| 'ghc-7.2.1' | '104.94734%' | '8.91630%' | '1.74543%' |

| 'ghc-7.4.1' | '118.73058%' | '41.94929%' | '2.35060%' |

| 'ghc-7.6.1' | '109.92789%' | '15.23370%' | '1.99001%' |

| 'ghc-7.8.1' | '98.46269%' | '12.41984%' | '2.64147%' |

| 'ghc-7.10.1' | '96.59251%' | '15.21649%' | '2.68550%' |

| 'ghc-8.0.1' | '96.59899%' | '13.66185%' | '2.96005%' |

| mean score (mean(mean / mean of first impl)) | mean score std (std(mean / mean of first impl)) | mean rel std (mean(std / mean)) | |

|---|---|---|---|

| 'ghc-7.0.1' | '100.00000%' | '0.00000%' | '2.15002%' |

| 'ghc-7.2.1' | '103.79046%' | '6.09583%' | '2.16509%' |

| 'ghc-7.4.1' | '116.47640%' | '41.46459%' | '2.52321%' |

| 'ghc-7.6.1' | '107.60777%' | '11.15838%' | '2.30860%' |

| 'ghc-7.8.1' | '101.52571%' | '12.69831%' | '2.79568%' |

| 'ghc-7.10.1' | '100.79559%' | '14.00779%' | '2.75813%' |

| 'ghc-8.0.1' | '101.45382%' | '12.58347%' | '3.15372%' |

Important note: I should emphasize one more time that this is like reading tea leaves and only suggest an area to investigate further.

Further work

- Use the assembler randomization feature of temci to see whether or not I just observed some cache effects (easy, costs only computing time on my benchmark computer and slightly modifying the game.py script)

- Fix the other benchmarks game code that I didn’t benchmark as it contained bugs

- Benchmark some older GHC versions

Thanks to Joachim Breitner for the idea for this blog post and his Haskell knowledge.

It could be more modular if you decouple the running the benchmark with various flags part with the statistical analysis part, which also gives the opportunity for other to use other statistical tools instead of python.

It’s already decoupled in a way, that temci produces several YAML result files that contain the raw data with some attributes (like category and implementation). The game.py code itself doesn’t do any of the benchmarking but produces a config file for temci.

I’m going to add the raw YAML file to the article.