Welcome back to my series on ebpf. In the last blog post, we learned how to auto-layout struct members and auto-generate BPFStructTypes for annotated Java records. We’re going to extend this work today.

This is a rather short blog post, but the implementation and fixing all the bugs took far more time then expected.

Generating Struct Definitions

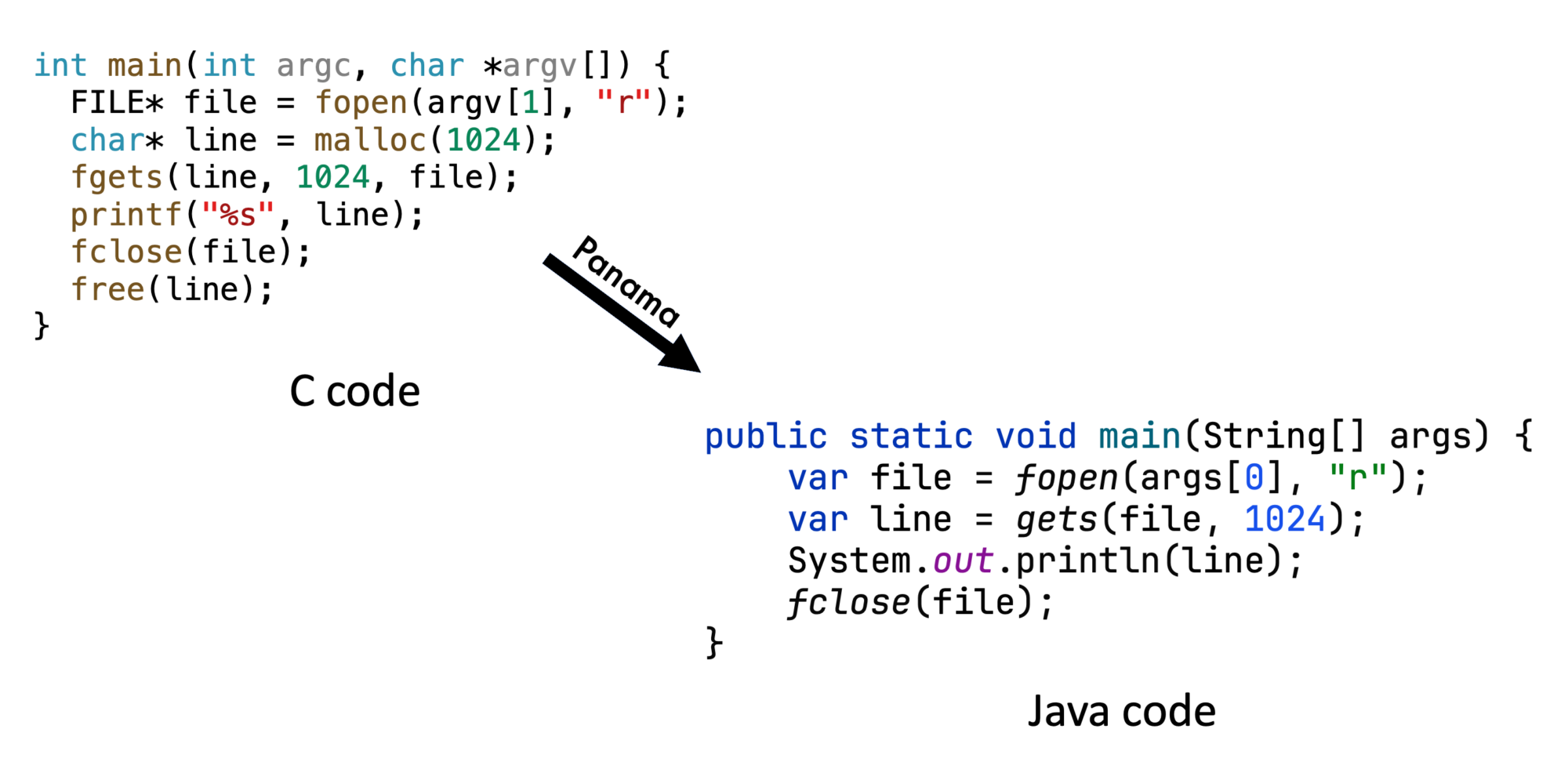

We saw in the last blog post how powerful Java annotation processing is for generating Java code; this week, we’ll tackle the generation of C code: In the previous blog post, we still had to write the C struct and map definitions ourselves, but writing

struct event {

u32 e_pid;

char e_filename[FILE_NAME_LEN];

char e_comm[TASK_COMM_LEN];

};

when we already specified the data type properly in Java

record Event(@Unsigned int pid,

@Size(FILE_NAME_LEN) String filename,

@Size(TASK_COMM_LEN) String comm) {}

seems to be a great place to improve our annotation processor. There are only two problems:

- The annotation processor needs to know about BPFTypes, so we have to move them in there. But the BPFTypes use the Panama API which requires the –enable-preview flag in JDK 21, making it unusable in Java 21. So we have to move the whole library over to JDK 22, as this version includes Panama.

- There is no C code generation library like JavaPoet for generating Java code.

Regarding the first problem: Moving to JDK 22 is quite easy, the only changes I had to make are listed in this gist. The only major problem was getting the Lima VM to use a current JDK 22. In the end I resorted to just using sdkman, you can a look into the install.sh script to see how I did it.

Regarding the second problem: We can reduce the problem of generating C code into two steps:

- Create an Abstract Syntax Tree (AST) for C

- Create a pretty printer for this AST

To create an AST I resorted to an ANSI C grammar for inspiration. Each AST node implements the following interface:

public interface CAST {

List<? extends CAST> children();

Statement toStatement();

/** Generate pretty printed code */

default String toPrettyString() {

return toPrettyString("", " ");

}

String toPrettyString(String indent, String increment);

}

We can then create a hierarchy of extending interfaces (PrimaryExpression, …) and implementing records (ConstantExpression, …). You can find the whole C AST on GitHub.

This leads us to an annotation processor that can add automatically insert struct definitions into the C code of our eBPF program, reducing the amount of hard-to-debug errors as it is guaranteed that both the Java specification and C representation of every type are compatible.

But can we do more with annotation processing?

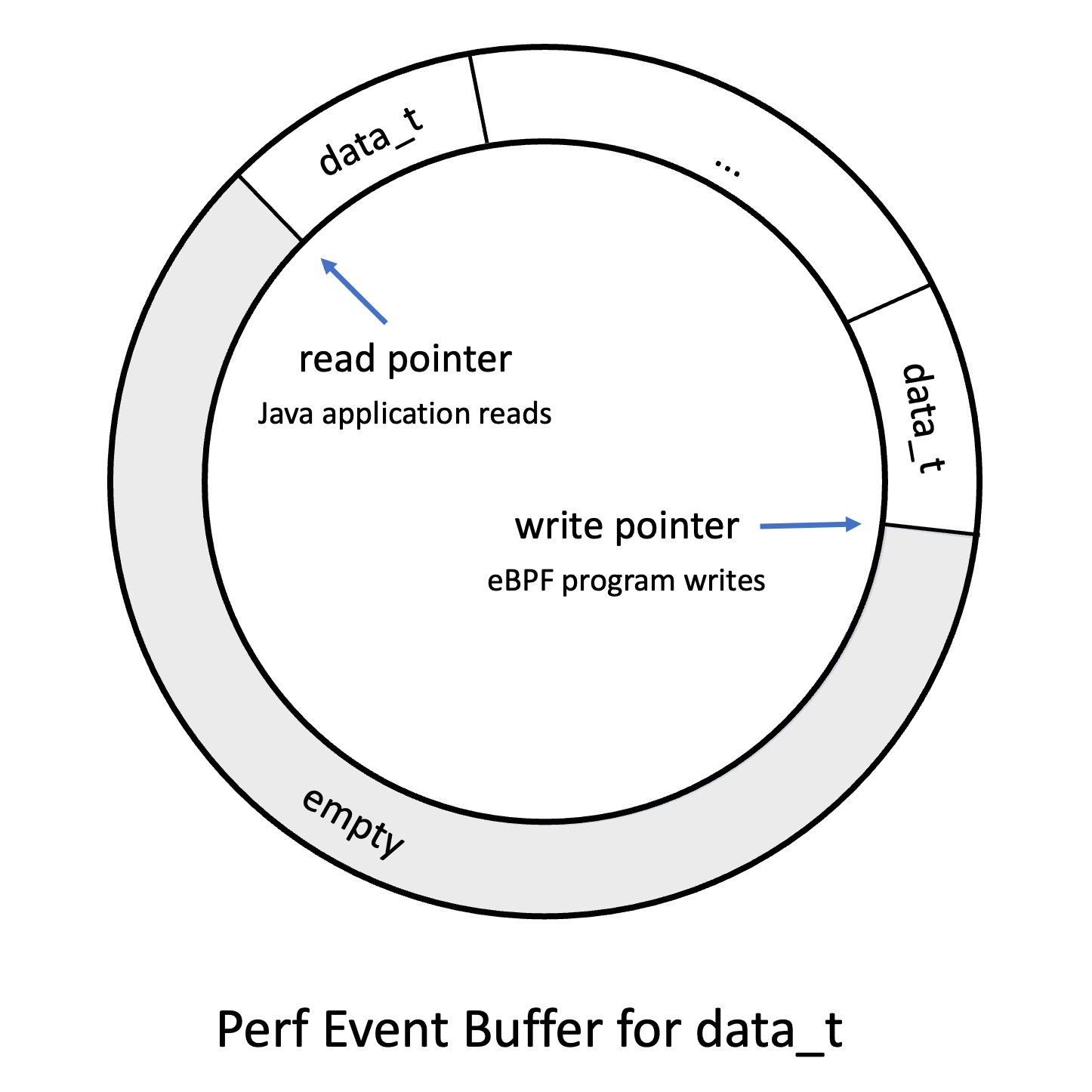

Generating Map Definitions

There is another definition that we can auto-generate: Map definitions like

struct

{

__uint (type, BPF_MAP_TYPE_RINGBUF);

__uint (max_entries, 256 * 4096);

} rb SEC (".maps");

which define maps like hash maps and ring buffers that allow the communication between user- and kernel-space.

With a little of annotation processor, we can define the same ring buffer from above in Java:

@BPFMapDefinition(maxEntries = 256 * 4096) BPFRingBuffer<Event> rb;

Our annotation-processor then turns this into the C definition from above and inserts code into the constructor of the Java program that properly initializes rb.

But how does the processor know what code it should generate? By parsing the BPFMapClass annotation on BPFRingBuffer (and any other class). This annotation contains the templates for both the C and the Java code:

@BPFMapClass(

cTemplate = """

struct {

__uint (type, BPF_MAP_TYPE_RINGBUF);

__uint (max_entries, $maxEntries);

} $field SEC(".maps");

""",

javaTemplate = """

new $class<>($fd, $b1)

""")

public class BPFRingBuffer<E> extends BPFMap {

}

Here $field is the Java field name, $maxEntries the value in the BPFMapDefinition annotation and $class the name of the Java class. $cX, $bX, $jX give the C type name, BPFType and Java class names related to the Xth type parameter.

Ring Buffer Sample Program

When we combine all this together we can have a much simpler ring buffer sample program (see TypeProcessingSample2 on GitHub):

@BPF(license = "GPL")

public abstract class TypeProcessingSample2 extends BPFProgram {

private static final int FILE_NAME_LEN = 256;

private static final int TASK_COMM_LEN = 16;

@Type(name = "event")

record Event(

@Unsigned int pid,

@Size(FILE_NAME_LEN) String filename,

@Size(TASK_COMM_LEN) String comm) {}

@BPFMapDefinition(maxEntries = 256 * 4096)

BPFRingBuffer<Event> rb;

static final String EBPF_PROGRAM = """

#include "vmlinux.h"

#include <bpf/bpf_helpers.h>

#include <bpf/bpf_tracing.h>

#include <string.h>

// This is where the struct and map

// definitions are inserted automatically

SEC ("kprobe/do_sys_openat2")

int kprobe__do_sys_openat2 (struct pt_regs *ctx)

{

// ... // as before

}

""";

public static void main(String[] args) {

try (TypeProcessingSample2 program =

BPFProgram.load(TypeProcessingSample2.class)) {

program.autoAttachProgram(

program.getProgramByName("kprobe__do_sys_openat2"));

// we can use the rb ring buffer directly

// but have to set the call back

program.rb.setCallback((buffer, event) -> {

System.out.printf(

"do_sys_openat2 called by:%s " +

"file:%s pid:%d\n",

event.comm(), event.filename(),

event.pid());

});

while (true) {

// consumes all registered ring buffers

program.consumeAndThrow();

}

}

}

}

There are two other things missing in the C code that are also auto-generated: Constant defining macros and the license definition. Macros are generated for all static final fields in the program class that are defined at compile time.

Conclusion

Using annotation processing allows to reduce the amount of C code we have to write and reduces errors by generating all definitions from the Java code. This simplifies writing eBPF applications.

See you in two weeks when we tackle global variables, moving closer and closer to making hello-ebpf’s bpf support able to write a small firewall.

This will also be the topic of a talk that I submitted together with Mohammed Aboullaite to several conferences for autumn.

Addendum

The more I work on writing my own ebpf library, the more I value the effort that the developers of other libraries like bcc, the Go or Rust ebpf libraries put it in to create usable libraries. They do this despite the lack of of proper documentation. A simple example is the deattaching of attached ebpf programs: There are multiple (undocumented) methods in libbpf that might be suitable; bpf_program__unload, bpf_link__detach, bpf_link__destroy, bpf_prog_detach, but only bpf_link__destroy properly detached a program.

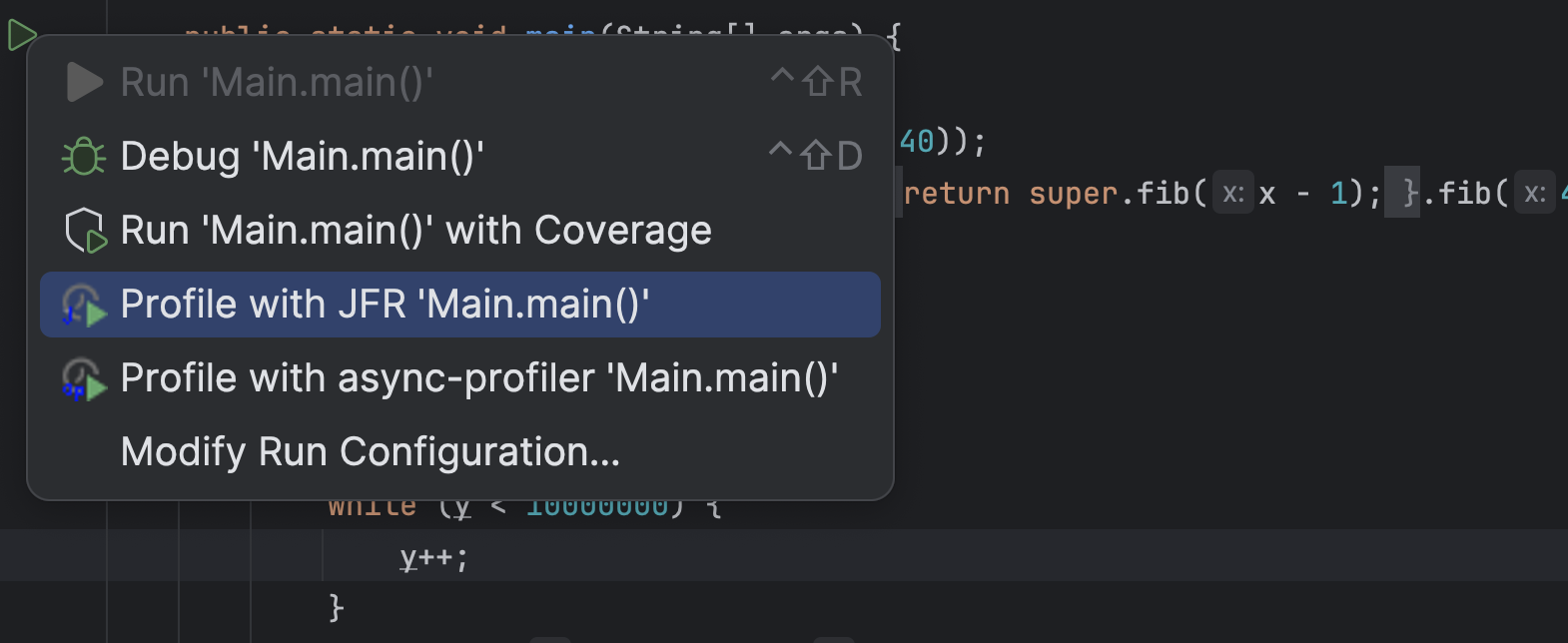

This article is part of my work in the SapMachine team at SAP, making profiling and debugging easier for everyone.